I talked a lot of big talk in my last post about the powers of WebGL, so here's my first stable proof-of-concept for a lot of things I've been working on:

http://www.unorthodox.com.au/astromech/jarvis.01/

A friend of mine called it my "JARVIS Interface", and she has a point.

WASD to move Doom-wise. Arrow keys and mouse drag to spin. Scroll wheel to change field-of-view. You'll get the hang of it.

It's pretty indecipherable, but old hands will recognize real-time 2D Fourier transforms, velocity (convolution) maps, and some primitive image stacking. The right-hand bunch of panels is a first, failing attempt to use convolution to seek out the target image (Saturn) in the video feed.

That part isn't working. Yet. It's basically a spectacular fail. It's just a snapshot of fail #103, which failed a little less hard than #102, in Iron Man progressive montage style.

In fact, if you don't have the right combination of browser and 3D card drivers to make it all go, it will silently error and break and you'll get some very uninteresting "flatline" displays. Check the console log.

So, there you have it. Real-time 30fps 512x512 2D fast Fourier transforms and convolutions, even though the math is wrong. (I'm normalizing my cross-power spectrum wrongly. Working on it now.)
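For reference, the post doesn't say which normalization was intended, so take this as an assumption: the textbook "phase correlation" form divides the cross-power spectrum by its own magnitude, leaving only phase. Here $F_1$ and $F_2$ are the 2D transforms of the two frames, and $r$ is the map whose peak marks the shift.

$$
R(u,v) = \frac{F_1(u,v)\,\overline{F_2(u,v)}}{\left|F_1(u,v)\,\overline{F_2(u,v)}\right|},
\qquad
r(x,y) = \mathcal{F}^{-1}\{R\}(x,y)
$$

In practice a small constant is usually added to the denominator so that near-empty frequency bins don't blow up.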

Alas I'll be busy for a few days more, but it was time to update the internet, and see if anyone is interested in where I'm going.

Friday, June 21, 2013

Thursday, June 13, 2013

And the winner is... GL!

For nearly my entire career, Microsoft has been trying to kill OpenGL and replace it with DirectX. I've never been entirely sure why. They used to support it (the first-gen Windows NT drivers were pretty good), but then they got struck by the DirectX thunderbolt and decided they weren't going to use an open standard already implemented by the biggest names in graphics; they were going to make their own.

There was even a period in the early 2000s where it looked like DirectX might win, because it really did perform better on that generation of hardware. Perhaps because of the high degree of involvement Microsoft had with those vendors. Features were enabled for DirectX that took months or years to appear as OpenGL extensions, for no obvious reason. It was a symbiotic relationship that sold a lot of 'certified' graphics cards and copies of Windows. If it hadn't been for Quake III Arena, OpenGL probably would have died on Windows entirely. (All hail the great John Carmack.)

But then, oh then, there was the great Silverlight debacle. I don't think there's any concise way to explain that big ball of disappointment. Perhaps there are internal corporate projects that have real-time media streams and 3D models zooming all over, but on the web it was a near-complete fail. A lot of people put their trust in Microsoft that it would be cross-platform enough to replace Flash. A lot of code got written for that theoretical future, and then it only properly worked on IE.

Here's the secret about web standards, and HTML5 in particular... they lie dormant until some critical 'ubiquity' percentage is passed, and then they explode and are everywhere. Good web developers want their site to work on all browsers, but they also want to use the coolest stuff. Therefore they use the coolest stuff which is supported by all browsers.

Of course, "All Browsers" is a stupidly wide range, so really a metric like "95-98% of all our visitors" is what gets used. So once a technology is available in 95% of people's browsers, that's the tipping point.

Silverlight didn't make it, because it didn't properly work on Linux or Mac. Or even most other Windows browsers. Flash used to enjoy this ubiquity, but Apple stopped that when it hired bouncers to keep Adobe out of iOS.

But the pressure kept building for access to the 3D card from inside a browser window. 3D CSS transforms meant the browser itself was positioning DIV elements in 3D space (and using the 3D card for compositing), and this deepened the ties between the browser and the GPU. Microsoft had already put all its eggs in the Silverlight basket, but everyone else wanted an open standard that could be incorporated quickly into HTML without too much trouble. There really wasn't any choice.

In a couple of hours, a delivery person should be dropping off a shiny new Google Nexus smartphone. If you run a special debug command, WebGL gets activated. On iOS, the WebKit component will do WebGL in certain modes (ads) but not in the actual browser. The hardware is already there (3D chips drive the display of many smartphones); it's just a matter of APIs.

WebGL's "Ubiquity" number is already higher than any equivalent 3D API. The openness of the standard guaranteed that. And as GPUs get more powerful, they look more and more like the Silicon Graphics Renderstations that OpenGL was written on, and less like the 16-bit Voodoo cards that DirectX was optimized for. OpenGL has been accused of being "too abstract", but that feature is now an advantage because of the range of hardware it runs on.

Lastly, WebGL is OpenGL-ES. That suffix makes a lot of difference. The 'ES' standard is something of a novelty in the world of standards... it's a reduction of the previous API set. Any method that performed an action that was a subset of another method was removed. So all the 'simple' calls went away, and left only the 'complex' versions. This might sound odd, but it's a stroke of genius. Code duplication is removed. There are fewer API calls to test and debug. And you _know_ the complex methods work consistently (aren't just an afterthought) because they're the only way to get everything done.

Rebuilding a 'simple' API of your own on top of the 'complex' underlying WebGL API is obviously the first thing everyone does. That's fine. There are a dozen approaches to doing that.

In short, WebGL is like opening a box of 'Original' LEGO, when you just had four kinds of brick in as many colours. You can make anything with enough of those bricks. Anything in your imagination. Sure, the modern LEGO has pre-made spaceship parts that just snap together, but where's the creativity? "Easy" is not the same as "Better".

There will quickly come the WebGL equivalent of jQuery. Now that the channel to the hardware is open, we can pretty up the JavaScript end with nice libraries. (Mine is coming along, although optimized in the 'GPU math' direction, which treats textures as large variables in an equation.) There are efforts like "three.js" which make WebGL just one renderer type (although the best) under a generic 3D API that also works cross-platform on VRML and Canvas. (Although you have fewer features, to maintain compatibility.)

The point is, while the WebGL API is a bit of a headspin (and contains some severe rocket science in the form of homogeneous co-ordinates and projective matrix transforms), you almost certainly won't have to use it directly. The ink on the standard is barely dry, and there are at least five independent projects to create complete modeler/game engines. Very shortly we will be 'drawing' 3D web pages and pressing the publish button. The prototypes are already done, and they work.
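If the "rocket science" sounds scarier than it is, here is a minimal sketch of the idea in plain Python rather than WebGL. It assumes the conventional OpenGL-style perspective matrix (camera looking down the negative z axis); the function names `perspective`, `transform` and `project` are made up for this illustration. A 3D point gets a fourth co-ordinate `w = 1`, is multiplied by a 4x4 matrix, and is then divided by the resulting `w`, which is what makes distant things smaller.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective matrix, written row by row (math convention)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],  # copies -z into w, ready for the divide
    ]

def transform(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def project(m, point):
    """Project a 3D point to normalized device co-ordinates."""
    x, y, z = point
    cx, cy, cz, cw = transform(m, [x, y, z, 1.0])  # homogeneous: w starts at 1
    return (cx / cw, cy / cw, cz / cw)             # the perspective divide

m = perspective(90.0, 1.0, 1.0, 100.0)
near_point = project(m, (1.0, 1.0, -2.0))   # two units in front of the camera
far_point = project(m, (1.0, 1.0, -20.0))   # same x and y, ten times further away
```

The same x and y land much closer to the center of the screen for `far_point` than for `near_point`. On a GPU the divide by `w` happens automatically after the vertex shader, and WebGL expects the matrix uploaded in column-major order, so the rows above would be transposed before being handed over.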

Wednesday, June 5, 2013

The Astrometrics Project

Below is a screenshot of a major success from the other night. It's a proof-of-concept of a very big idea, and will need some explaining, not least because prototypes are notoriously unfriendly and obscure.

It's a web page, that's clear enough. You have to use your imagination somewhat and realize that the individual box over on the right was a live camera feed from the small astronomical telescope I now have set up in the back yard. It's a webcam video box.

That input image from the webcam is then being thrown into the computational coffee grinder, in the form of Fast Fourier Transforms being implemented in the core of my 3D card's Texture Engine. You can see the original image in the top-left of the 'wall', and its FFT frequency analysis directly below it.

The bar graphs are center slices across the 'landscape' of the frequency image above them, because in the frequency domain we're dealing with complex numbers and negative values, which render badly into pixel intensities when debugging.

Doing a 2D FFT in real-time is pretty neat, but that's not all. The FFT from the current frame is compared against the previous frame (shown next to it, although without animation it's hard to see the difference) through a convolution, which basically means multiplying the frames together in a special way, and taking the inverse Fourier transform of what you get.

The result of that process is the small dot in the upper right-hand quadrant box. That dot is a measurement of the relative motion of the star from frame to frame. It's a 'targeting lock'. Actually, it's several useful metrics in one:

- It's the 'correspondence map' of how similar both images are when one is shifted by the co-ordinates of the output pixel. So the center pixel is an indication of how well they line up exactly, the pixel to the left is an indication of how well they line up if the second image is shifted one pixel to the left, and so on.

- For frames which are entirely shifted by some amount, the 'lock' pixel will shift by the same amount. It becomes a 'frame velocity measurement'.

- If multiple things in the scene are moving, a 'lock' will appear for the velocity of each object, proportional to its size in the image.

- If the image is blurred by linear motion, the 'lock' pixel is blurred by the same amount. (The "Point Spread Function" of the blur)

- Images which are entirely featureless do not generate a clear lock.

- Images which contain repeating copies of the same thing will have a 'lock' pixel at the location of each repetition.

This is what happens when you 'convolve' two images in the frequency domain. Special tricks are possible which just can't be done per-pixel in the image. You get 'global phase' information about all the pixels, assuming you can think in those terms about images.
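For anyone who wants to poke at the idea without a GPU, here is a minimal sketch in plain Python. It is not the WebGL code behind the demo: it uses a slow, naive DFT on a tiny 8x8 frame, and the names `dft2`, `phase_correlate` and `make_frame` are invented for the illustration. It also assumes the usual "phase correlation" recipe, where one spectrum is multiplied by the complex conjugate of the other (strictly a cross-correlation rather than a convolution) and the result is normalized to unit magnitude before the inverse transform.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform of a 1D sequence."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out

def dft2(img, inverse=False):
    """2D DFT: transform every row, then every column."""
    rows = [dft(r, inverse) for r in img]
    cols = [dft(list(c), inverse) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def phase_correlate(prev, curr):
    """Return (dy, dx): how far curr is shifted relative to prev (wraps around)."""
    f_prev, f_curr = dft2(prev), dft2(curr)
    h, w = len(prev), len(prev[0])
    # Cross-power spectrum, normalized so that only the phase survives.
    cross = [[f_curr[y][x] * f_prev[y][x].conjugate() for x in range(w)]
             for y in range(h)]
    unit = [[c / abs(c) if abs(c) > 1e-12 else 0j for c in row] for row in cross]
    corr = dft2(unit, inverse=True)
    # The brightest pixel of the correlation map is the 'lock'.
    _, dy, dx = max((corr[y][x].real, y, x) for y in range(h) for x in range(w))
    return dy, dx

def make_frame(size, top, left):
    """A black frame with one small, lopsided 'star' on it."""
    frame = [[0.0] * size for _ in range(size)]
    frame[top][left] = 5.0
    frame[top][(left + 1) % size] = 2.0
    frame[(top + 1) % size][left] = 1.0
    return frame

prev_frame = make_frame(8, 2, 3)
curr_frame = make_frame(8, 3, 5)   # star moved down 1 row, right 2 columns
shift = phase_correlate(prev_frame, curr_frame)
```

Because the transform is circular, shifts come back in the range 0 to size-1, so a value past the halfway point is really a negative shift that has wrapped around. A real implementation would use an FFT instead of the quadratic loop here.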

Of course you're wondering, "Sure, but what's the framerate on that thing? It's in a web browser." Well, as I type this, it's running in the window behind at 50fps. Full video rate. My CPU is at 14%.

So now you're thinking, "what kind of monster machine do you have that can perform multiple 256-point 2D Fourier transforms and image convolution in real-time, in a browser?" Well, I have an ATI Radeon 7750 graphics card.

Yup, the little one. The low-power version. Doesn't even have a secondary power connector. Gamers would probably laugh at it.

So the next question is, what crazy special pieces of prototype software am I running to do this? None. It's standard Google Chrome under Windows. I did have to make sure my 3D card drivers were completely up to date, if that makes you feel better. Also, three thousand lines of my code.

Most of this stuff probably wouldn't have worked 3-6 months ago... although WebGL gets lumped in with HTML5 (it's actually a separate Khronos standard), it's one of the last pieces to get properly implemented.

In fact, just two days ago Chrome added a shiny new feature which makes life much easier for people with multiple webcams / video sources. (i.e., me!) You can just click on the little camera to switch inputs, rather than digging three levels deep in the config menu. So it's trivial to have different windows attached to different cameras now. Thanks, Google!

The HTML5 spec is still lacking when it comes to multiple camera access, but that's less important now. (And probably "by design", since choosing among cameras might not be something you leave to random web pages...)

So, why?

Astromech, Version 0

This is where I should wax lyrical about the majesty of the night sky, the sweeping arc of our galaxy and its shrouded core. All we need to do is look up, and hard enough, and infinite wonder is arrayed in every direction, to the limits of our sight.

Those limitations can be pretty severe, though. Diffraction effects, Fresnel Limits, the limits of the human eye, CCD thermal noise, analog losses, and transmission errors. Not to mention clouds, wind, dew, and pigeons.

Professional astronomers spend all their days tuning and maintaining their telescopes. But weather is the great leveler, which means that a lucky amateur standing in the right field can have a better night than the pros. In fact, the combined capability of the Amateur Astronomy community is known to be greater, in terms of collecting raw photons, than the relatively few major observatories.

Modern-day amateurs have some incredible hardware, such as high-resolution DSLR digital cameras that can directly trace their ancestry to the first-gen CCDs developed for astronomical use. And the current generation of folded-optics telescopes are also a shock to those used to meter-long refractors.

Everyone is starting to hit the same limits - the atmosphere itself. Astronomy and astrophotography are becoming a matter of digital signal processing.

The real challenge is this: how do we take the combined data coming from all the amateur telescopes and 'synthesize' it into a single high-resolution sky map? Why, we would need some kind of web-based software which could access the local video feed and perform some advanced signal processing on it before sending it on through the internet.... ah.

Perhaps now you can see where I'm going with this.

Imagine Google Sky (the other mode of Google Earth) but where you can _see_ the real-time sky being fed from thousands of automated amateur observatories. (plus your contribution) Imagine the internet, crunching its way through that data to discover dots that weren't there before, or that have moved because they're actually comets, or just exploded as supernovae.

My ideas on how to accomplish this are moving fast, as I catch up on the latest AI research, but before the synthesis comes data acquisition.

Perhaps one fuzzy star and one fuzzy targeting lock isn't much, but it's a start. I've made a measurement on a star. Astrometrics has occurred.

|

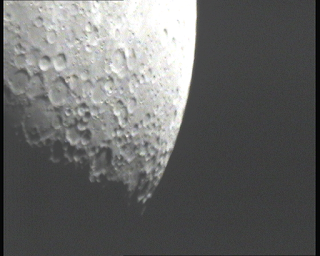

| The Moon, captured with a CCD security camera through a 105mm Maksutov Cassegrain Telescope |

Hopefully before my next shot at clear skies (it was cloudy last night, and today isn't looking any better) I can start pulling some useful metrics out of the convolution. The 'targeting dot' can be analysed to obtain not only the 'frame drift' needed to 'stack' all the frames together (to create high-resolution views of the target) but also to estimate the 'point spread function' needed for deblurring. With a known and accurate PSF, you can reverse the effects of blurring in software.
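To make the deblurring claim concrete, here is a minimal sketch in plain Python, on a 1D strip of pixels rather than a full image. It assumes the blur is a circular (wrap-around) convolution with a PSF that is known exactly, and it uses a lightly regularized inverse filter. The names `circular_blur` and `deblur` and the parameter `eps` are invented for this example and are not taken from any stacking package.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform of a 1D sequence."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out

def circular_blur(signal, psf):
    """Blur by circular convolution of the signal with the PSF."""
    n = len(signal)
    return [sum(signal[(i - j) % n] * psf[j] for j in range(n)) for i in range(n)]

def deblur(blurred, psf, eps=1e-9):
    """Undo a known blur by dividing spectra; eps guards near-zero bins."""
    spectrum = dft(blurred)
    response = dft(psf)
    restored = [y * h.conjugate() / (abs(h) ** 2 + eps)
                for y, h in zip(spectrum, response)]
    return [v.real for v in dft(restored, inverse=True)]

sharp = [0.0, 0.0, 0.0, 9.0, 0.0, 1.0, 0.0, 0.0]   # a bright star and a faint one
psf = [0.5, 0.3, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0]     # smears light to the right
blurred = circular_blur(sharp, psf)
restored = deblur(blurred, psf)
```

With a noise-free signal and an exact PSF, `restored` matches `sharp` almost perfectly. Real frames are noisy, so `eps` would need to be much larger (or frequency-dependent, as in a Wiener filter) to stop the division from amplifying noise where the PSF's spectrum is weak.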

Yes, there is software that already does this (RegiStax, DeepSkyStacker, etc.), but it all works in 'off-line' mode, which means you have no idea how good your imagery really is until the next day. That's no help when you're in a cold field, looking at a dim battery-saving laptop screen through a car window, trying to figure out if you're still in focus.

So, a good first job for "Astromech" is to give me some hard numbers on how blurry it thinks the image is. That's probably today's challenge, although real life beckons with its paperwork and hassles.
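The post doesn't say what that number would be, so the following is only one plausible candidate, sketched in plain Python: since a blurred image smears the 'lock' by the same amount, the RMS radius of the correlation peak around its own centroid gives a rough blur figure in pixels. The function name `peak_spread` is hypothetical, and the sketch ignores wrap-around at the frame edges.

```python
import math

def peak_spread(corr):
    """RMS radius (in pixels) of a correlation map around its weighted centroid."""
    # Negative values carry no 'brightness', so clamp them to zero.
    weights = [[max(v, 0.0) for v in row] for row in corr]
    total = sum(sum(row) for row in weights)
    if total == 0.0:
        return None  # featureless map: no lock, so no meaningful number
    cy = sum(y * w for y, row in enumerate(weights) for w in row) / total
    cx = sum(x * w for row in weights for x, w in enumerate(row)) / total
    variance = sum(((y - cy) ** 2 + (x - cx) ** 2) * w
                   for y, row in enumerate(weights)
                   for x, w in enumerate(row)) / total
    return math.sqrt(variance)

# A crisp lock: all the energy sits in one pixel.
crisp = [[0.0] * 5 for _ in range(5)]
crisp[2][2] = 1.0

# A lock smeared sideways, as linear motion blur would do.
smeared = [[0.0] * 5 for _ in range(5)]
smeared[2][1], smeared[2][2], smeared[2][3] = 0.25, 0.5, 0.25

crisp_score = peak_spread(crisp)
smeared_score = peak_spread(smeared)
```

A crisp lock scores zero and the score grows as the peak spreads out, so watching it fall while turning the focus knob would be one way to use it.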

It's fun to be writing software in this kind of 'experimental, explorative' mode, where you're literally not sure if something is possible until you actually try it. I've found myself pulling down a lot of my old Comp Sci textbooks with names like "Advanced Image Processing", skipping quickly through to the last chapter, and then being disappointed they end there. In barely a month, I've managed to push myself right up to the leading edge of this topic. I've built software that's almost ready for mass release, of a kind that has only existed before in research labs. There's every chance it will run on next-gen mobile phones.

This is gonna work.
