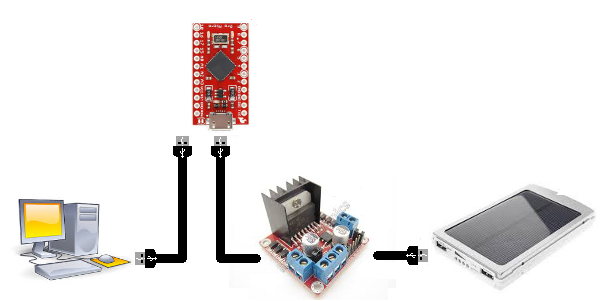

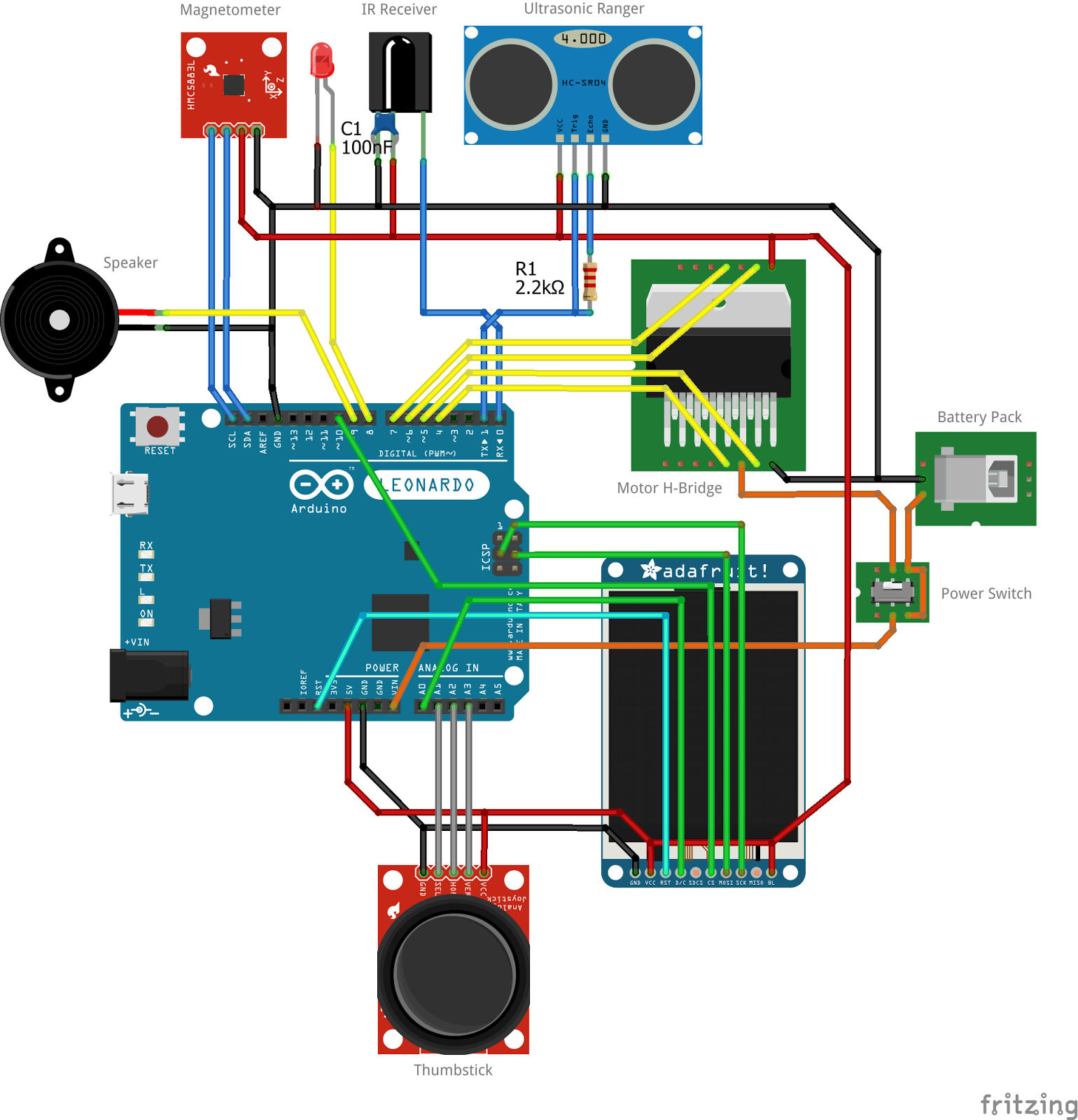

Part of last week's release was a very hacked-together GUI for my programmable droid interface. The screenshots above are a couple that I've posted before.

Not shown is the thumb-stick that controls the square cursor that you can see near the middle of the screen. The basic features are:

- point-and-click style interface using thumbstick.

- line-oriented layout that favors runs of text symbols

- clicks initiate immediate action, or enter menu mode where moving the joystick will modify the item, after which clicking again will commit the choice.

- redraw manager maintains a list of updated regions, and can be called incrementally by the main loop.

- screens can be 'paged', so that the cursor is either limited to the edge of the screen, or navigates over to a new page, or a different screen.
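The click behaviour in the list above can be read as a two-state machine: browsing, and editing an item. Here is a minimal host-side sketch of that idea. It is not code from the library, and `MenuItem`, `Ui`, `on_click` and `on_stick` are all hypothetical names chosen for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical item: a value that can be edited within [min, max].
struct MenuItem {
    int16_t value;
    int16_t min;
    int16_t max;
};

enum class Mode : uint8_t { Browse, Edit };

struct Ui {
    Mode mode = Mode::Browse;
    int16_t pending = 0;   // value being edited, not yet committed

    // A click either enters edit mode or commits the pending value.
    void on_click(MenuItem &item) {
        if (mode == Mode::Browse) {
            pending = item.value;
            mode = Mode::Edit;
        } else {
            item.value = pending;
            mode = Mode::Browse;
        }
    }

    // Stick movement only changes the pending value while editing.
    void on_stick(const MenuItem &item, int16_t delta) {
        assert(item.min <= item.max);
        if (mode != Mode::Edit) return;
        int32_t next = int32_t(pending) + delta;
        if (next < item.min) next = item.min;
        if (next > item.max) next = item.max;
        pending = int16_t(next);
    }
};
```

The whole state costs three bytes of RAM, and the item is only written on the second click, so nothing changes if the user never commits.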

If you take a look in <unorthodox_droid_raster.h>, you'll find a set of 'Pager' classes that are 'bound' to the Raster classes, meaning they ask the raster classes to draw things. These are refactors of the older <unorthodox_droid_gfx.h> classes that were bound to the Adafruit GFX library.

I have no intention of 'abstracting' them both - the raster classes are the only ones in use, but the older classes are left as examples and prototypes for people who have already committed to the GFX library, or who perhaps want to port the classes to a third hardware/driver library.

Frankly, the classes aren't really important except as examples. That's going to be the hardest part of this explanation: my 'library' isn't what you expect. There's really not a lot of code there - which is the point. What I have is a way of breaking down the problem so that custom GUIs can be created within the very hard limitations of the Arduino.

Here are a few things to consider, issues which make this particular version of the problem different from the 'classic' cases:

- Low screen bandwidth - because the screen is usually on the end of a serial link, instead of dual-port SRAM on a video card, simply clearing the screen can take over a second.

- Write-only - the screens I work with have no way to read pixels back from the display.

- Memory mismatch - total RAM on the Arduino is 2.5K. A 24-bit 320x256 image needs 245K bytes. The Arduino would need about 100 times more RAM to keep a local 'copy' of what is on the screen.

If we assume our UI is made up from some 'fixed' elements and some 'variable' bits, what we're really asking is to create a structure that fits within the tiny 2.5K Arduino RAM, that we can modify, which will somehow be able to repaint that entire 245K screen surface.

There's a name for trying to keep something big in a small space: Compression.

Therefore the real secret to building an Arduino GUI is not about widget toolkits and pen styles, it's going to be about efficiently accessing compressed data.

The moment we have a cursor pointer moving around, we're going to have to redraw little chunks of the screen where it is, and when it leaves. We have to be able to efficiently reach into the structure to redraw line spans when the cursor moves, or text content changes.

Compression is all about context. The briefest recorded telegram conversation consisted of one symbol sent by each participant. The first sent "?", and the second replied "!". In their context it made perfect sense, and couldn't have been clearer. If you haven't heard the story, then it is less so.

So our first layer of compression is to cut down the problem space in a way that 'everybody knows about', but that doesn't limit us too much. Since most of the 'interfaces' that I needed to build were essentially various kinds of configuration menus, and menus are lists of text items, it was pretty clear I could build such an interface out of "lines of text" and not much else. (Curses, you say.)

Font glyphs (especially European ones) can be compactly represented in 6x8 pixel blocks, or even 5x7 if you always assume a 'spacer' pixel between them. 8x8 might sound neater, but the aspect ratio of 6x8 glyphs is a lot nicer. 8x8 fonts look very 'fat', and fit fewer characters per line on small screens.

If we assume our screen is made of a grid of 6x8 pixel blocks, each one of which might contain a character, then we vastly simplify our data load from say 320x256x3 bytes (RGB pixels) to 53x32x2 bytes (assuming one glyph byte and one 'style' byte per cell).

Thus, keeping a coloured fixed-font grid would require 3.3K of memory. Still too much.
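The arithmetic behind those figures can be checked at compile time. This is just the numbers from the text written out as constants; the names are mine, and 2.5K is taken to mean 2560 bytes.

```cpp
#include <cstdint>

// Figures taken from the text: a 320x256 display, 24-bit colour,
// 6x8 pixel character cells, and 2 bytes (glyph + style) per cell.
constexpr uint32_t kScreenW = 320;
constexpr uint32_t kScreenH = 256;
constexpr uint32_t kCellW = 6;
constexpr uint32_t kCellH = 8;

constexpr uint32_t kFramebufferBytes = kScreenW * kScreenH * 3;  // 245760
constexpr uint32_t kCols = kScreenW / kCellW;   // 53, with 2 pixels left over
constexpr uint32_t kRows = kScreenH / kCellH;   // 32
constexpr uint32_t kGridBytes = kCols * kRows * 2;               // 3392

// 2.5K of RAM, as quoted in the text (assumed to mean 2560 bytes).
constexpr uint32_t kRamBytes = 2560;

static_assert(kFramebufferBytes == 245760, "raw RGB framebuffer");
static_assert(kGridBytes == 3392, "character grid");
static_assert(kGridBytes > kRamBytes, "even the grid does not fit in RAM");
```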

Wow. We can't even keep a full-screen character grid. Even the cheapest microcomputer of the 1980s could do that.

We make another assumption: on most of our 'menu based' screens, there are going to be lists of things. "Lists" by definition are a bunch of lines that have a similar format. If they have the same general format, but different 'drop-in' values, then that's essentially good old printf().

In the screenshots shown at the top, you can squint and notice that most screens only have two 'line formats' - the header line, and the bulk list lines (empty lines not counted). In fact, the classes that back these screens have a very simple 'redraw' handler which tests if the row is <1 (in which case it draws the header) or >1 (in which case it subtracts two, adds the page offset, and draws that item number).

These classes are not filled with data structures that are parsed by an outside framework - they are full of if/then and case statements that hard-code the screen layout into compiled instructions - the fastest possible way to render.
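As a rough illustration of that row test, here is a host-side sketch that resolves a screen row to either the header, the blank row, or a list item. `RowKind`, `RowTarget` and `resolve_row` are hypothetical names, not part of the library.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical result of resolving a screen row.
enum class RowKind : uint8_t { Header, Blank, Item };

struct RowTarget {
    RowKind kind;
    int16_t item;   // only meaningful when kind == RowKind::Item
};

// Row 0 is the header, row 1 is left blank, and every later row maps to
// a list item: subtract the two fixed rows, then add the page offset.
inline RowTarget resolve_row(int16_t row, int16_t page_offset) {
    assert(row >= 0 && page_offset >= 0);
    if (row < 1) return { RowKind::Header, 0 };
    if (row > 1) return { RowKind::Item, int16_t(row - 2 + page_offset) };
    return { RowKind::Blank, 0 };
}
```

With a page offset of 16, screen row 5 resolves to item 19. The layout lives entirely in the two comparisons, so there is no table to keep in RAM.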

The RasterPager class is the base class that each screen is built from. (What might be called a 'screen' is internally referred to as a Pager, because it 'knows how to draw a page'.)

Pagers are asked by the draw manager to draw RasterSpan objects, which are essentially defined as a sequential span of characters on a single text line, plus some raster context. "line 10, chars 8-17" is a typical span.

The draw manager keeps two bytes for each line to record the 'invalid' span: how much we need to redraw next time, which may be nothing. If the manager is asked to 'invalidate' more spans on a line which already has one, the existing span is extended to include those characters as well.
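The two-bytes-per-line bookkeeping might look something like the following. This is a sketch under my own assumptions, not the library's code: `DirtyLines` is a hypothetical name, the span is stored as a half-open range, and the 53x32 grid size comes from the earlier arithmetic.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the draw manager's per-line bookkeeping:
// two bytes per text line, holding a half-open range [start, end).
// start == end means nothing on that line needs redrawing.
constexpr uint8_t kRows = 32;
constexpr uint8_t kCols = 53;

struct DirtyLines {
    uint8_t start[kRows] = {};
    uint8_t end[kRows] = {};

    bool is_dirty(uint8_t row) const { return start[row] != end[row]; }

    // Mark columns [from, to) on a row as needing a redraw. If the row
    // already has a pending span, widen it to cover both.
    void invalidate(uint8_t row, uint8_t from, uint8_t to) {
        assert(row < kRows && from < to && to <= kCols);
        if (!is_dirty(row)) {
            start[row] = from;
            end[row] = to;
        } else {
            if (from < start[row]) start[row] = from;
            if (to > end[row]) end[row] = to;
        }
    }

    void clear(uint8_t row) { start[row] = end[row] = 0; }
};
```

For 32 lines this costs 64 bytes. The trade-off is that two small spans at opposite ends of a line merge into one wide span, so the characters in between get repainted too.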

When the draw manager is given a 'time slice' to spend on rendering pixels, it finds the next line which has a span that needs to be drawn, and asks the Pager to redraw it.

The draw manager has no idea how the span is going to be painted - it might have character glyphs drawn into it, or just pretty patterns - it just knows which parts of which lines are waiting to be done. When it comes time to do the job, the draw manager passes the span request on to the Pager class.
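One way to picture the time-slice behaviour is a manager that redraws at most one pending span per call and remembers where it stopped. Again this is a hedged sketch: `Span`, `Pager`, `DrawManager`, `draw_one` and `RecordingPager` are hypothetical stand-ins, not the RasterSpan and RasterPager classes themselves.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint8_t kRows = 32;

// Hypothetical span request: one text row, columns [start, end).
struct Span {
    uint8_t row;
    uint8_t start;
    uint8_t end;
};

// Hypothetical pager interface: the manager only knows that a pager
// can paint a span, not what ends up in it.
struct Pager {
    virtual void draw_span(const Span &s) = 0;
    virtual ~Pager() {}
};

struct DrawManager {
    uint8_t start[kRows] = {};
    uint8_t end[kRows] = {};
    uint8_t next_row = 0;   // where the previous time slice stopped

    // Redraw at most one pending span, then return to the main loop.
    // Returns false when there was nothing left to draw.
    bool draw_one(Pager &pager) {
        for (uint8_t i = 0; i < kRows; ++i) {
            uint8_t row = uint8_t((next_row + i) % kRows);
            if (start[row] == end[row]) continue;   // line is clean
            assert(start[row] < end[row]);          // spans are never inverted
            Span s = { row, start[row], end[row] };
            start[row] = end[row] = 0;              // clear before drawing
            pager.draw_span(s);
            next_row = uint8_t((row + 1) % kRows);
            return true;
        }
        return false;
    }
};

// Example pager that just records what it was asked to paint.
struct RecordingPager : Pager {
    int calls = 0;
    Span last = { 0, 0, 0 };
    void draw_span(const Span &s) override { ++calls; last = s; }
};
```

The main loop would call `draw_one()` whenever it has time to spare. Resuming from `next_row` means a busy line near the top cannot starve the lines below it.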

So when the Pager gets the request to draw parts of 'line 0' (for example) it can resolve this in any way it likes. Case statement, array lookup, random number generator, whatever. In many ways, the Pager classes are like HTML 'stylesheet' classes; they mostly contain formatting information.

Because we are interested in rendering lines of coloured text, the RasterPager class has a method called format_span() which works very similarly to printf() in that it has a fixed "format string" parameter (compactly stored in program flash memory) and a set of variable parameters in an array.

format_span() is written to efficiently render sub-spans of the entire line - it can efficiently skip over parts that aren't involved, and doesn't buffer. It can extract single digits from the middle of decimal-formatted and aligned numeric values, and use single parameters multiple times in multiple ways, as decimal values, style indexes, or string selectors.
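To show what extracting a single digit without buffering can mean, here is a small sketch that computes the character at one column of a right-aligned decimal field. It is not the library's format_span(); `decimal_char_at` is a hypothetical helper, and unsigned values with space padding are my assumptions.

```cpp
#include <cassert>
#include <cstdint>

// Character at column `col` (0 = leftmost) of `value` printed as an
// unsigned decimal, right-aligned in a field `width` columns wide and
// padded with spaces. No intermediate string is built.
inline char decimal_char_at(uint16_t value, uint8_t width, uint8_t col) {
    assert(col < width);
    // Drop the digits that sit to the right of the requested column.
    uint8_t from_right = uint8_t(width - 1 - col);
    uint16_t v = value;
    for (uint8_t i = 0; i < from_right; ++i) v /= 10;
    // Past the most significant digit there is only padding, except
    // that zero itself still prints a single '0' in the last column.
    if (v == 0 && from_right != 0) return ' ';
    return char('0' + v % 10);
}
```

For the value 1234 in a six-column field, column 2 yields '1' and column 5 yields '4', so redrawing "chars 2-3" only ever touches two digits. A value too wide for its field simply loses its leading digits in this sketch.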

Here are the top two line definitions used by the SignalPager screen shown. (There's a debug footer I've omitted.) It looks bulky in source, but the first string is only 14 bytes long (compiled) and the second is 26 bytes.

    // title line
    PROGMEM prog_uint8_t SignalPager_title_line[] = {
        // fragment metadata
        RasterPager::Span | 6,
        RasterPager::Span | 9 | RasterPager::Last,
        // fragment instructions
        RasterPager::Lit | 1, ' ',
        RasterPager::Lit | 9, 'X','-','D','R','O','I','D',' ','1'
    };

    // list line
    PROGMEM prog_uint8_t SignalPager_signal_line[] = {
        // fragment metadata
        RasterPager::Style | RasterPager::Vector | 5, RasterPager::Span | 2,
        RasterPager::Style | 6, RasterPager::Span | 4,
        RasterPager::Style | 3, RasterPager::Span | 2,
        RasterPager::Style | 0, RasterPager::Span | 7,
        RasterPager::Style | 3, RasterPager::Span | 5,
        RasterPager::Style | RasterPager::Vector | 3, RasterPager::Span | 1 | RasterPager::Last,
        // fragment instructions
        RasterPager::Lit | 2, '<',' ',
        RasterPager::Dec | 0,
        RasterPager::Lit | 2, ' ','(',
        RasterPager::Dec | 1,
        RasterPager::Lit | 3, ' ',')',' ',
        RasterPager::Lit | 1, '>'
    };

Here's the overridden draw_span() method on that class, which selects which of those formats to use (based on the line), stuffs the vector with the relevant parameters, and then calls format_span().

    void draw_span(RasterSpan * s) {
        int row = s->row;
        prog_uint8_t * line_format = 0;
        int line_vector[8];
        if(row==0) {
            // title line
            line_format = SignalPager_title_line;
        } else if(row==1) {
            // no format selected for row 1: line_format stays 0
        } else if(row<18) {
            // list rows: map the screen row to a signal number
            int line = row + scroll_y*16 - header;
            line_format = SignalPager_signal_line;
            line_vector[0] = line;
            line_vector[1] = droid->signal_value[line];
            line_vector[2] = droid->fs.token_size(line&0x7F);
            line_vector[3] = line_vector[2] ? 2 : 3;
            line_vector[4] = droid->fs.token_size((line&0x3F)|0x80);
            line_vector[5] = (line<64) ? (line_vector[4] ? 2 : 3) : 7;
        }
        format_span(s, line_format, line_vector);
    }

Note that apart from the function calls to obtain the parameter values (which we have to do anyway), the code essentially consists of stuffing a local fixed array with values (very cheap) and then calling one method. Remember that function calls have overhead, and can incur anywhere from a dozen to hundreds of bytes of compiled program code. Calling the raster equivalent of drawText() and setColor() for each parameter directly would clearly take more code bytes - the fewer calls we need to make, the better. One is pretty minimal. One shared is even better.

The chain now goes: the RasterDroid draw manager knows which bits of which lines to redraw, so it incrementally calls the pager draw_span() for each one, and the pager passes that span request (along with the appropriate line definition and looked-up parameters) over to format_span(), which then draws individual font symbols to the raster device using draw_text() as needed. Whew!

This is how we decompress the instruction 'redraw span 8-10 on line 3' into pixels which hit the screen. Not with data structures - because they don't fit in RAM - but with pure code. Our program flash budget is much bigger, so we depend on that instead. Also, by compiling the code we create the fastest possible execution path, optimized for each 'screen' while sharing the common 'drawing primitive' they all use.

This is all totally backwards when compared with how a UI toolkit like Windows or Aqua or GTK does things - with abstract widgets. But abstraction is a luxury that, on our memory budget, we can't afford. Knowing our context means abandoning polymorphism, to some degree, by "hardcoding magic numbers", or in this case, format strings and click positions.

Although... since the code is so structured, there's no reason why a 'widget layout' system couldn't be created which code-generates these classes. That system could be full of interesting widget code that aligns columns or draws ASCII art, but that can all be done pre-compile.
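As a taste of what such a pre-compile step could look like, here is a tiny host-side sketch that turns a piece of literal text into one `RasterPager::Lit` fragment in the notation used by the line tables above. No such generator exists in the release; `emit_literal` is a hypothetical name, and since the text doesn't say how large a count fits next to the Lit flag, the caller has to supply that limit.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Emit one literal fragment as C source text:
//   RasterPager::Lit | <count>, 'c', 'c', ...
// `max_count` is the largest count the fragment byte can hold; the
// real limit is not stated in the text, so it is a parameter here.
inline std::string emit_literal(const std::string &text, std::size_t max_count) {
    assert(!text.empty() && text.size() <= max_count);
    std::string out = "RasterPager::Lit | " + std::to_string(text.size());
    for (char c : text) {
        out += ", '";
        if (c == '\'' || c == '\\') out += '\\';   // escape for C source
        out += c;
        out += "'";
    }
    return out;
}
```

Calling `emit_literal("< ", 15)` produces `RasterPager::Lit | 2, '<', ' '`, which matches the first instruction of the list line. All the column alignment and layout logic would live in a tool like this on the desktop, and only the resulting bytes would reach the Arduino.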